1 A Retrospective on Research in Human-Computer Emotional Interaction

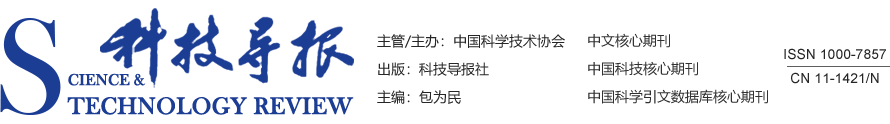

1.1 Major Advances in Interaction Theory

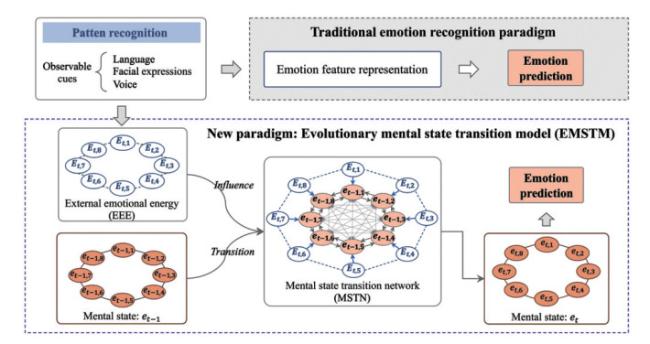

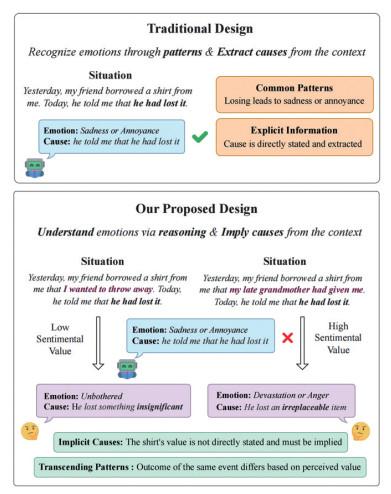

1.2 Emotion Cognition

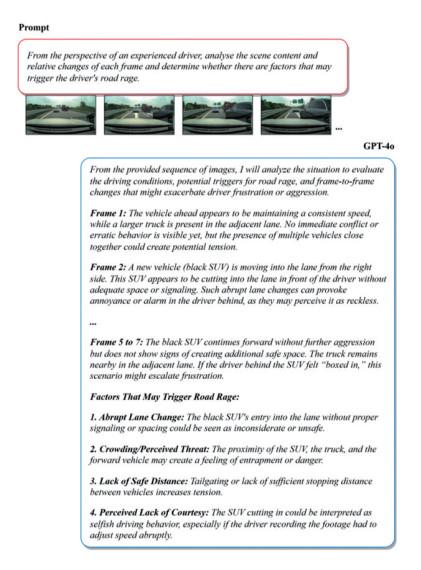

1.3 Emotion Regulation

1.4 Evaluation of Emotional Interaction Capabilities

2 Research Challenges in Human-Computer Emotional Interaction

Table 1 A 2024 retrospective on research into multimodal large models for emotional interaction

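| Research direction | Research content |
| --- | --- |
| Emotion cognition | Xing [38] proposed a design framework for financial sentiment analysis built on heterogeneous LLM agents. It uses prior guiding knowledge from linguistics and finance to instantiate multiple heterogeneous specialized agents, and an aggregation agent then summarizes their outputs. Venerito et al. [39] proposed an LLM-driven sentiment analysis method for diagnosing fibromyalgia. By detecting linguistic and emotional cues that reflect fibromyalgia-related central sensitization and negative affect, it effectively assists clinical diagnosis. Hellwig et al. [40] explored the ability of LLMs to generate data labels for fine-grained aspect-based sentiment analysis. In low-resource scenarios, synthetic data augmentation yielded significant gains on aspect-category sentiment analysis. |
| Emotion regulation | Na [51] proposed CBT-LLM, a large language model designed specifically for cognitive behavioral therapy (CBT) techniques. It excels at generating structured, professional, and highly relevant responses in mental health support tasks. An emotion-guided multimodal dialogue model [52] decomposes its framework into three parts: an emotion retrieval module, an emotional response prediction module, and an emotion-enhanced response generation module. A two-stage training strategy lets it understand the subtle emotions behind multimodal input and generate finer-grained emotional dialogue. Llanes-Jurado et al. [55] built a real-time conversational virtual human that conducts semi-guided dialogue using a large language model. The system integrates real-time speech synthesis, real-time lip synchronization, real-time facial expression generation, and an LLM-driven dialogue system, and it can effectively elicit human emotions. |
| Emotional interaction capability evaluation | EmoBench is the first comprehensive benchmark designed specifically to evaluate the emotional interaction capabilities of multimodal large models. It spans five mainstream emotion tasks, covering both general emotion tasks and emotion application tasks [61]. FOFO is the first benchmark to split LLM instruction following into content following and format following and to evaluate format-following ability. Through a three-step human-AI collaborative strategy, it builds complex hierarchical layouts spanning multiple domains and data formats [62]. Mizrahi et al. [63] studied the brittleness of single-prompt evaluation in depth. They proposed a set of diverse metrics based on multiple paraphrased instructions, which help make LLM evaluations more consistent and comparable. |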
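
The Mizrahi et al. [63] row argues against trusting a score obtained from a single instruction wording. The sketch below illustrates that idea under stated assumptions. It scores one model under several paraphrased instructions and reports aggregate statistics rather than one number. The helper `multi_prompt_summary`, the example prompts, and the accuracy values are all hypothetical. The mean/max/min/spread/std summaries are generic aggregates, not the specific metrics defined in [63].

```python
from statistics import mean, pstdev


def multi_prompt_summary(scores_by_prompt: dict[str, float]) -> dict[str, float]:
    """Summarize one model's accuracy across paraphrased instruction templates.

    Hypothetical helper for illustration: these are generic aggregates,
    not the exact metrics proposed by Mizrahi et al.
    """
    if not scores_by_prompt:
        raise ValueError("need at least one prompt paraphrase")
    scores = list(scores_by_prompt.values())
    return {
        "mean": mean(scores),                 # expected performance over paraphrases
        "max": max(scores),                   # best case, as if the prompt were tuned
        "min": min(scores),                   # worst case for an unlucky wording
        "spread": max(scores) - min(scores),  # sensitivity to instruction wording
        "std": pstdev(scores),                # dispersion across paraphrases
    }


# Made-up accuracies of one model on the same emotion-recognition test set,
# each obtained with a differently worded instruction (hypothetical data).
scores = {
    "Classify the emotion of this text.": 0.72,
    "What emotion does the writer express?": 0.64,
    "Label the emotion: joy, anger, sadness, or fear.": 0.80,
}

summary = multi_prompt_summary(scores)
print({name: round(value, 3) for name, value in summary.items()})
```

With these made-up numbers, a single-prompt evaluation could report anywhere from 0.64 to 0.80 for the same model. Reporting the mean alongside the spread makes that sensitivity visible, which is the kind of brittleness [63] highlights.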